How to Download Git Repository Again

Git is a fantastic choice for tracking the evolution of your code base and collaborating efficiently with your peers. But what happens when the repository you want to track is really large?

In this post I'll give you some techniques for dealing with it.

Two categories of big repositories

If you think about it, there are broadly two major reasons for repositories growing massive:

- They accumulate a very long history (the project grows over a very long period of time and the baggage accumulates)

- They include huge binary assets that need to be tracked and paired together with code.

…or it could be both.

Sometimes the second type of problem is compounded by the fact that old, deprecated binary artifacts are still stored in the repository. But there's a moderately easy (if annoying) fix for that (see below).

The techniques and workarounds for each scenario are different, though sometimes complementary. So I'll cover them separately.

Cloning repositories with a very long history

Even though the threshold for qualifying a repository as "massive" is pretty high, such repositories are still a pain to clone. And you can't always avoid long histories. Some repos have to be kept intact for legal or regulatory reasons.

Simple solution: git shallow clone

The first solution for a fast clone that saves developers' and systems' time and disk space is to copy only recent revisions. Git's shallow clone option allows you to pull down only the latest n commits of the repo's history.

How do you do it? Just use the --depth option. For example:

git clone --depth [depth] [remote-url]

Imagine you accumulated ten or more years of project history in your repository. For example, we migrated Jira (an 11-year-old code base) to Git. The time savings for repos like this can add up and be very noticeable.

The full clone of Jira is 677MB, with the working directory being another 320MB, made up of more than 47,000 commits. A shallow clone of the repo takes 29.5 seconds, compared to 4 minutes 24 seconds for a full clone with all the history. The benefit grows proportionately to how many binary assets your project has swallowed over time.

Tip: Build systems connected to your Git repo benefit from shallow clones, too!

Shallow clones used to be somewhat impaired citizens of the Git world, as some operations were barely supported. But recent versions (1.9 and above) have improved the situation greatly, and you can properly pull and push to repositories even from a shallow clone now.
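To make the effect of --depth concrete, here is a minimal sketch that runs entirely on your own machine. It builds a throwaway repository with five commits, shallow-clones it, and then fetches the rest of the history. The directory names, the demo identity, and the depth of 2 are all made up for illustration; the file:// URL is there because Git ignores --depth when you clone from a plain local path.

```shell
#!/bin/sh
# Sketch only: every path and name below is hypothetical.
set -e
work=$(mktemp -d)

# Build a small "remote" repository with five commits.
git init -q "$work/origin"
cd "$work/origin"
git config user.email "demo@example.com"
git config user.name "Demo"
for i in 1 2 3 4 5; do
  echo "revision $i" > file.txt
  git add file.txt
  git commit -q -m "commit $i"
done

# Shallow clone: only the latest two commits are transferred.
cd "$work"
git clone -q --depth 2 "file://$work/origin" shallow
shallow_count=$(git -C shallow rev-list --count HEAD)
echo "commits in shallow clone: $shallow_count"

# If the full history is needed later, it can still be fetched.
git -C shallow fetch -q --unshallow
full_count=$(git -C shallow rev-list --count HEAD)
echo "commits after unshallow: $full_count"
```

On a real project you would replace the file:// URL with your remote and pick a depth that covers the history your build or review workflow actually needs.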

Surgical solution: git filter-branch

For huge repositories that have lots of binary cruft committed by mistake, or old assets that are not needed anymore, a great solution is to use git filter-branch. The command lets you walk through the entire history of the project, filtering out, modifying, and skipping files according to predefined patterns.

It is a very powerful tool once you've identified where your repo is heavy. There are helper scripts available to identify large objects, so that part should be easy enough.

The syntax goes like this:

git filter-branch --tree-filter 'rm -rf [/path/to/spurious/asset/folder]'
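If you don't have a helper script at hand, plain Git plumbing can do the job. The sketch below is one common recipe, not the specific scripts mentioned above: it lists every blob in the history with its size and sorts by size. The demo repository and file names are invented so that the example is self-contained.

```shell
#!/bin/sh
# Sketch only: the repository and file names are hypothetical.
set -e
work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"
git config user.email "demo@example.com"
git config user.name "Demo"

# One small text file and one 200000-byte binary file.
echo "small" > readme.txt
head -c 200000 /dev/zero > big.bin
git add .
git commit -q -m "add files"

# List all objects reachable from any ref, ask Git for type and size,
# keep only blobs, and print "size path" for the largest one.
# Note: the awk step truncates paths that contain spaces.
largest=$(git rev-list --objects --all \
  | git cat-file --batch-check='%(objecttype) %(objectname) %(objectsize) %(rest)' \
  | awk '$1 == "blob" { print $3, $4 }' \
  | sort -rn \
  | head -n 1)
echo "$largest"
```

In a real repository, drop the setup and raise the head limit (for example head -n 20) to get a shortlist of cleanup candidates.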

git filter-branch has a minor drawback, though: once you use filter-branch, you effectively rewrite the entire history of your project. That is, all commit ids change. This requires every developer to re-clone the updated repository.

So if you're planning to carry out a cleanup action using git filter-branch, you should alert your team, plan a short freeze while the operation is carried out, and then notify everyone that they should clone the repository again.

Tip: More on git filter-branch in this post about tearing apart your Git repo.
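Here is a small sketch of what that rewrite looks like in practice, on a disposable repository. The assets folder, file names, and demo identity are hypothetical. FILTER_BRANCH_SQUELCH_WARNING only silences the advisory banner that newer Git versions print before running filter-branch.

```shell
#!/bin/sh
# Sketch only: run this on a throwaway repository, never on shared history
# without coordinating with your team first.
set -e
work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"
git config user.email "demo@example.com"
git config user.name "Demo"

# First commit contains code plus an unwanted assets folder.
mkdir assets
head -c 100000 /dev/zero > assets/blob.bin
echo "code" > main.txt
git add .
git commit -q -m "add code and assets"

# Second commit only touches code.
echo "more code" >> main.txt
git commit -q -am "update code"

before=$(git rev-parse HEAD)

# Rewrite every commit on every ref, deleting the assets folder in each one.
FILTER_BRANCH_SQUELCH_WARNING=1 git filter-branch -f \
  --tree-filter 'rm -rf assets' -- --all >/dev/null 2>&1

after=$(git rev-parse HEAD)
touching=$(git log --oneline -- assets | wc -l | tr -d ' ')

echo "HEAD before: $before"
echo "HEAD after:  $after"
echo "commits still touching assets/: $touching"
```

The two HEAD ids differ even though the last commit never touched the assets folder, which is exactly why everyone has to re-clone afterwards.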

Alternative to git shallow-clone: clone only one branch

Since Git 1.7.10, you can also limit the amount of history you clone by cloning a single branch, like so:

git clone [remote url] --branch [branch_name] --single-branch [folder]

This specific hack is useful when you're working with long-running and divergent branches, or if you have lots of branches and only ever need to work with a few of them. If you only have a handful of branches with very few differences, you probably won't see a huge difference using this.
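A quick way to see what a single-branch clone contains is to try it against a local repository. In this sketch the branch names feature and release, the folder name only-feature, and the demo identity are all invented for illustration.

```shell
#!/bin/sh
# Sketch only: branch and folder names are hypothetical.
set -e
work=$(mktemp -d)
git init -q "$work/origin"
cd "$work/origin"
git config user.email "demo@example.com"
git config user.name "Demo"
echo "base" > file.txt
git add file.txt
git commit -q -m "base"

# Two extra branches next to the default one.
git branch feature
git branch release

# Clone only the feature branch.
cd "$work"
git clone -q "file://$work/origin" --branch feature --single-branch only-feature

# Count the remote-tracking branches the clone knows about
# (symbolic entries such as origin/HEAD are filtered out).
tracked=$(git -C only-feature branch -r | grep -v -- '->' | wc -l | tr -d ' ')
current=$(git -C only-feature rev-parse --abbrev-ref HEAD)
echo "checked out: $current, remote branches tracked: $tracked"
```

The clone tracks only the branch you asked for; the other branches stay on the server until you fetch them explicitly.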

Managing repositories with huge binary assets

The second type of big repository is one with huge binary assets. This is something many different kinds of software (and non-software!) teams encounter. Gaming teams have to juggle huge 3D models, web development teams might need to track raw image assets, and CAD teams might need to manipulate and track the status of binary deliverables.

Git is not especially bad at handling binary assets, but it's not especially good either. By default, Git will compress and store all subsequent full versions of the binary assets, which is obviously not optimal if you have many.

There are some basic tweaks that improve the situation, like running the garbage collection ('git gc'), or tweaking the usage of delta commits for some binary types in .gitattributes.

But it's important to reflect on the nature of your project's binary assets, as that will help you determine the winning approach. For instance, here are some points to consider:

- For binary files that change significantly (and not just some metadata headers), delta compression is probably going to be useless. So use 'delta off' (the -delta attribute in .gitattributes) for those files to avoid the unnecessary delta compression work as part of the repack.

- In the scenario above, it's likely that those files don't zlib compress very well either, so you could turn compression off with 'core.compression 0' or 'core.loosecompression 0'. That's a global setting that would negatively affect all the non-binary files that actually compress well, so this makes sense if you split the binary assets into a separate repository.

- It's important to remember that 'git gc' turns the "duplicated" loose objects into a single pack file. But again, unless the files compress in some way, that probably won't make any significant difference in the resulting pack file.

- Explore the tuning of 'core.bigFileThreshold'. Anything larger than 512MB won't be delta compressed anyway (without having to set .gitattributes), so perhaps that's something worth tweaking.
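As a rough sketch of how those tweaks look on disk, the commands below turn delta compression off for one file pattern and lower the big-file threshold in a scratch repository. The *.bin pattern, the file name model.bin, and the 100m value are assumptions picked for the example, not recommendations.

```shell
#!/bin/sh
# Sketch only: pattern, file name and threshold are hypothetical.
set -e
work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"

# Disable delta compression for files matching *.bin.
echo '*.bin -delta' > .gitattributes

# Ask Git how it resolves the delta attribute for a matching path.
attr=$(git check-attr delta -- model.bin)
echo "$attr"

# Skip delta compression for anything above 100 MB in this repository only.
git config core.bigFileThreshold 100m
threshold=$(git config --get core.bigFileThreshold)
echo "core.bigFileThreshold = $threshold"

# In a dedicated assets repository you could also disable zlib compression:
#   git config core.compression 0
```

Using git config without --global keeps the settings local to the one repository, which matters for core.compression because it would otherwise penalize your text-heavy repos.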

Solution for big folder trees: git sparse-checkout

A mild help for the binary assets problem is Git's sparse checkout option (available since Git 1.7.0). This technique allows you to keep the working directory clean by explicitly detailing which folders you want to populate. Unfortunately, it does not affect the size of the overall local repository, but it can be helpful if you have a huge tree of folders.

What are the involved commands? Here's an example:

- Clone the full repository once: 'git clone'

- Activate the feature: 'git config core.sparsecheckout true'

- Add folders that are needed explicitly, ignoring assets folders:

- echo src/ > .git/info/sparse-checkout

- Read the tree as specified:

- git read-tree -m -u HEAD

After the above, you can go back to using your normal git commands, but your working directory will only contain the folders you specified above.
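The steps above can be tried end to end on a local repository. In this sketch the src and assets folders, their contents, and the demo identity are invented; the Git commands are the ones listed in the steps.

```shell
#!/bin/sh
# Sketch only: folder and file names are hypothetical.
set -e
work=$(mktemp -d)

# A "remote" with a code folder and a heavy assets folder.
git init -q "$work/origin"
cd "$work/origin"
git config user.email "demo@example.com"
git config user.name "Demo"
mkdir src assets
echo "int main(void) { return 0; }" > src/main.c
head -c 100000 /dev/zero > assets/texture.bin
git add .
git commit -q -m "code and assets"

# 1. Clone the full repository once.
cd "$work"
git clone -q "file://$work/origin" sparse
cd sparse

# 2. Activate the feature.
git config core.sparseCheckout true

# 3. List the folders to populate; assets/ is deliberately left out.
mkdir -p .git/info
echo "src/" > .git/info/sparse-checkout

# 4. Re-read the tree so the working directory matches the list.
git read-tree -m -u HEAD

ls
```

Only src is left in the working directory, while the objects for assets are still in .git, which is why this helps with clutter but not with disk usage.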

Solution for controlling when you update large files: submodules

[UPDATE] …or you can skip all that and use Git LFS

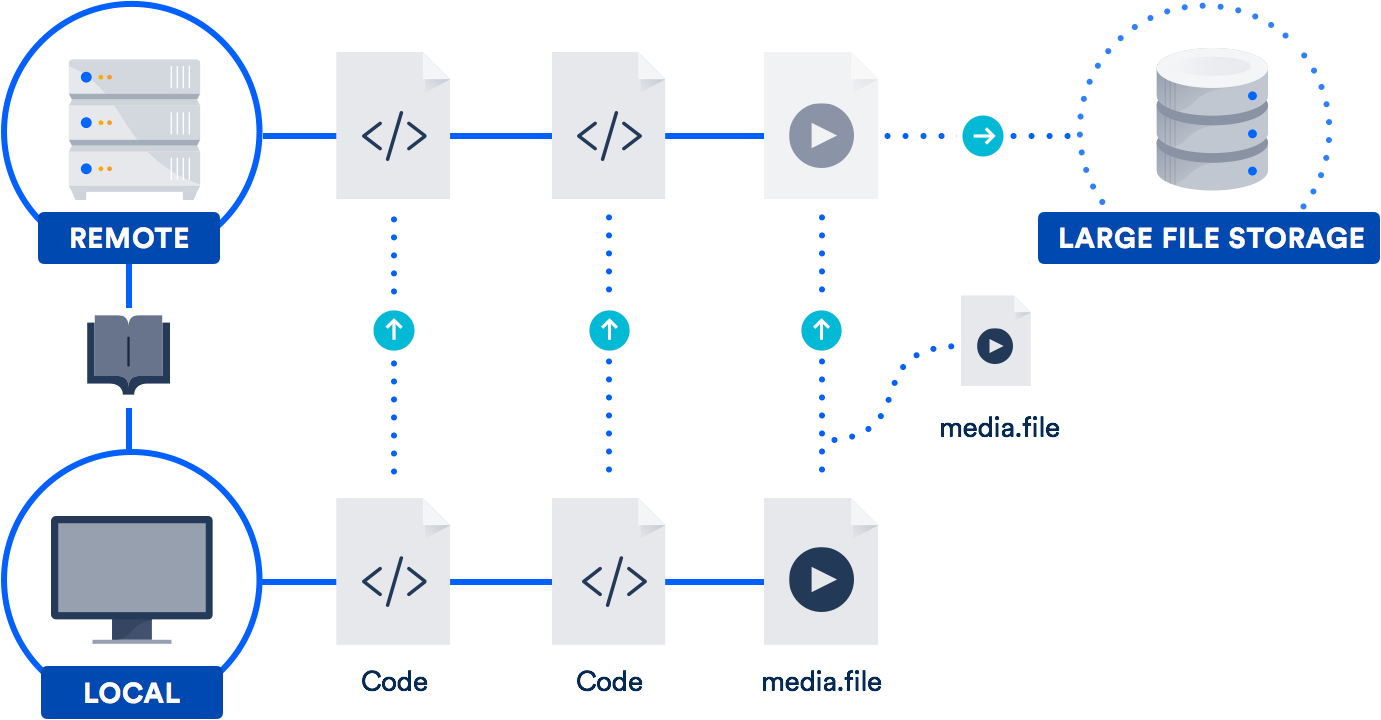

If you work with big files on a regular basis, the best solution might be to take advantage of the large file support (LFS) Atlassian co-developed with GitHub in 2015. (Yep, you read that right. We teamed up with GitHub on an open-source contribution to the Git project.)

Git LFS is an extension that stores pointers (naturally!) to large files in your repository, instead of storing the files themselves in there. The actual files are stored on a remote server. As you can imagine, this dramatically reduces the time it takes to clone your repo.

Bitbucket supports Git LFS, as does GitHub. So chances are, you already have access to this technology. It's especially helpful for teams that include designers, videographers, musicians, or CAD users.
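To give a feel for the setup, here is a minimal sketch of telling Git LFS which files to manage. It assumes *.psd as the pattern and design.psd as a file name, both made up. If the git-lfs extension isn't installed, the sketch falls back to writing by hand the same .gitattributes line that 'git lfs track' produces, so you can still see how the pattern is recorded.

```shell
#!/bin/sh
# Sketch only: the *.psd pattern and design.psd are hypothetical.
set -e
work=$(mktemp -d)
git init -q "$work/repo"
cd "$work/repo"

if git lfs version >/dev/null 2>&1; then
  # Install the LFS hooks for this repository and track the pattern.
  git lfs install --local >/dev/null
  git lfs track "*.psd" >/dev/null
else
  # Fallback: the line that 'git lfs track "*.psd"' would add.
  echo '*.psd filter=lfs diff=lfs merge=lfs -text' >> .gitattributes
fi

# Confirm that matching files are routed through the lfs filter.
filter=$(git check-attr filter -- design.psd)
echo "$filter"
```

Remember to commit .gitattributes itself, so that everyone who clones the repository gets the same tracking rules.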

Conclusions

Don't give up the fantastic capabilities of Git just because you have a big repository history or huge files. There are workable solutions to both problems.

Check out the other articles I linked to above for more info on submodules, project dependencies, and Git LFS. And for refreshers on commands and workflow, our Git microsite has loads of tutorials. Happy coding!

Source: https://www.atlassian.com/git/tutorials/big-repositories
